About

I am now a Postdoctoral Fellow at Caltech CMS hosted by Prof. Yisong Yue. I am also a researcher at Google.

I received my PhD in Computer Science from UCLA, where I had the good fortune to be advised by Prof. Yizhou Sun and to work closely with Prof. Kai-Wei Chang. I received my bachelor's degree in Computer Science from Peking University, advised by Prof. Xuanzhe Liu. My research has been generously supported by the Baidu PhD Fellowship and the Amazon PhD Fellowship. I have interned at Google Brain and Microsoft Research.

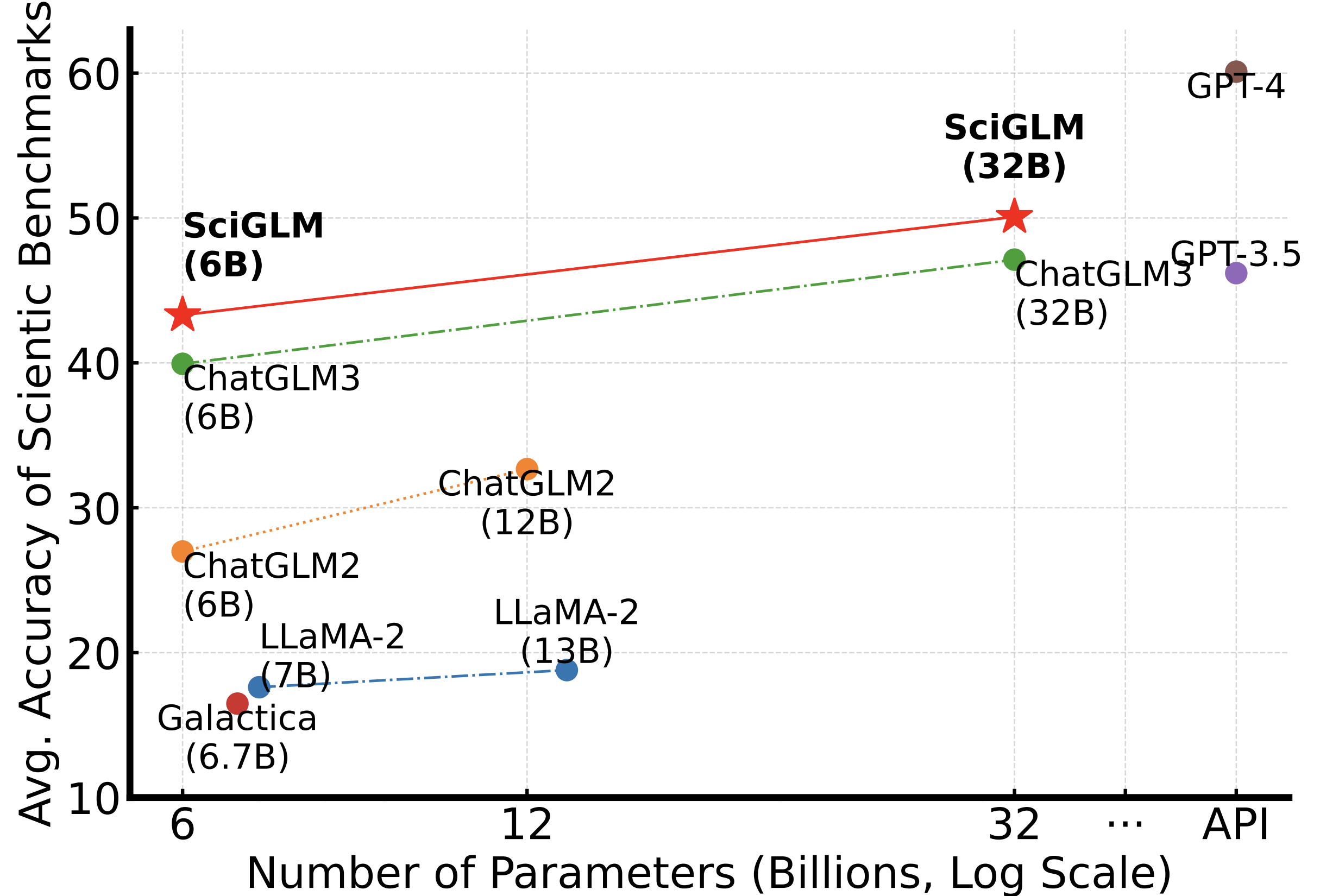

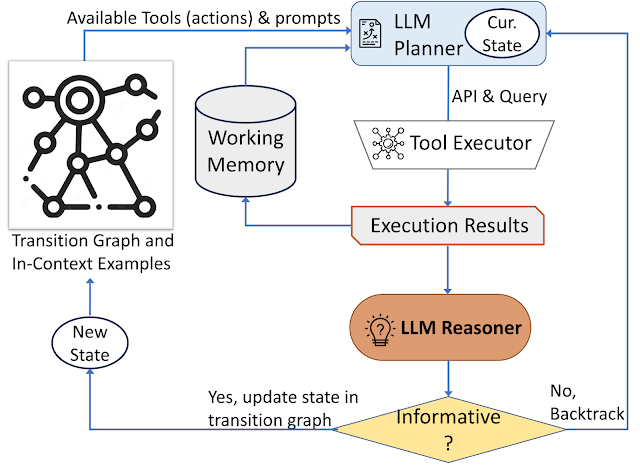

My recent research focuses on Large Language Models (LLMs), including Agents (using tools, memory and graphs), Planning and Reasoning (especially in math, science, code and games), and Self-Improvement.

My long-term research goal is to build Neural-Symbolic AI, with lines of work in:

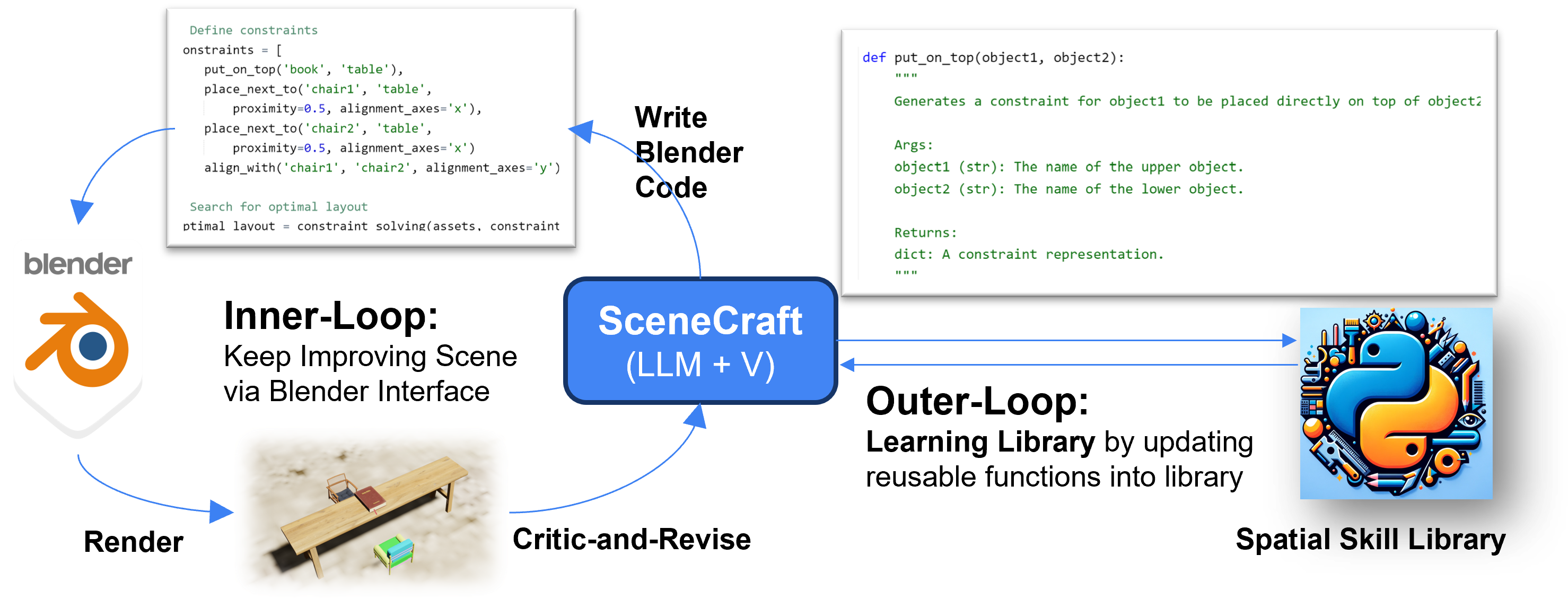

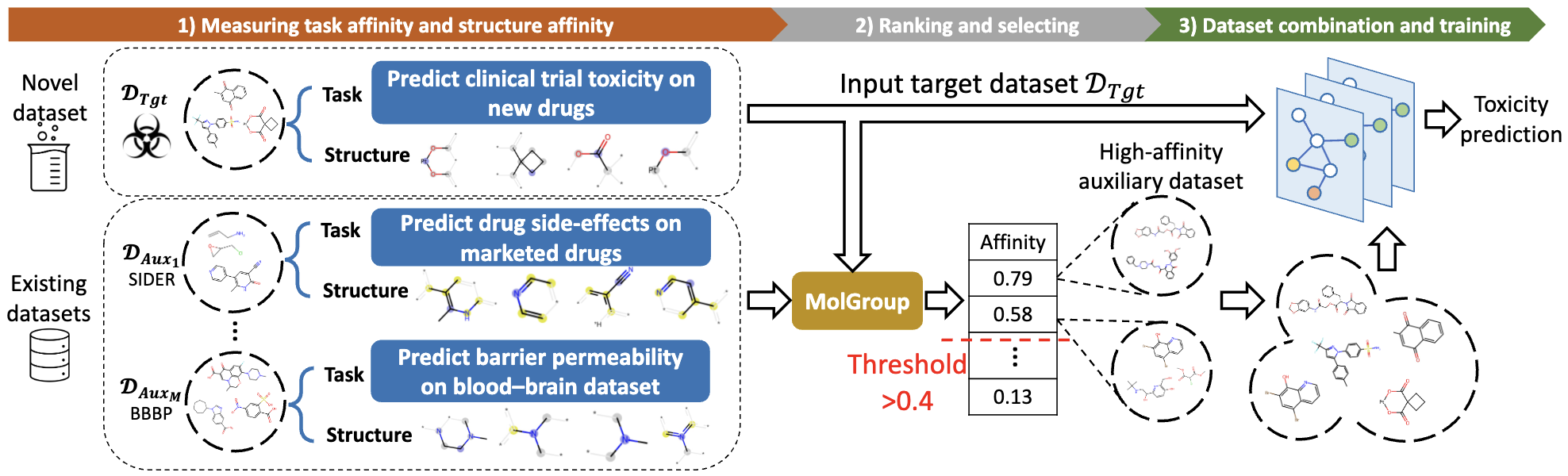

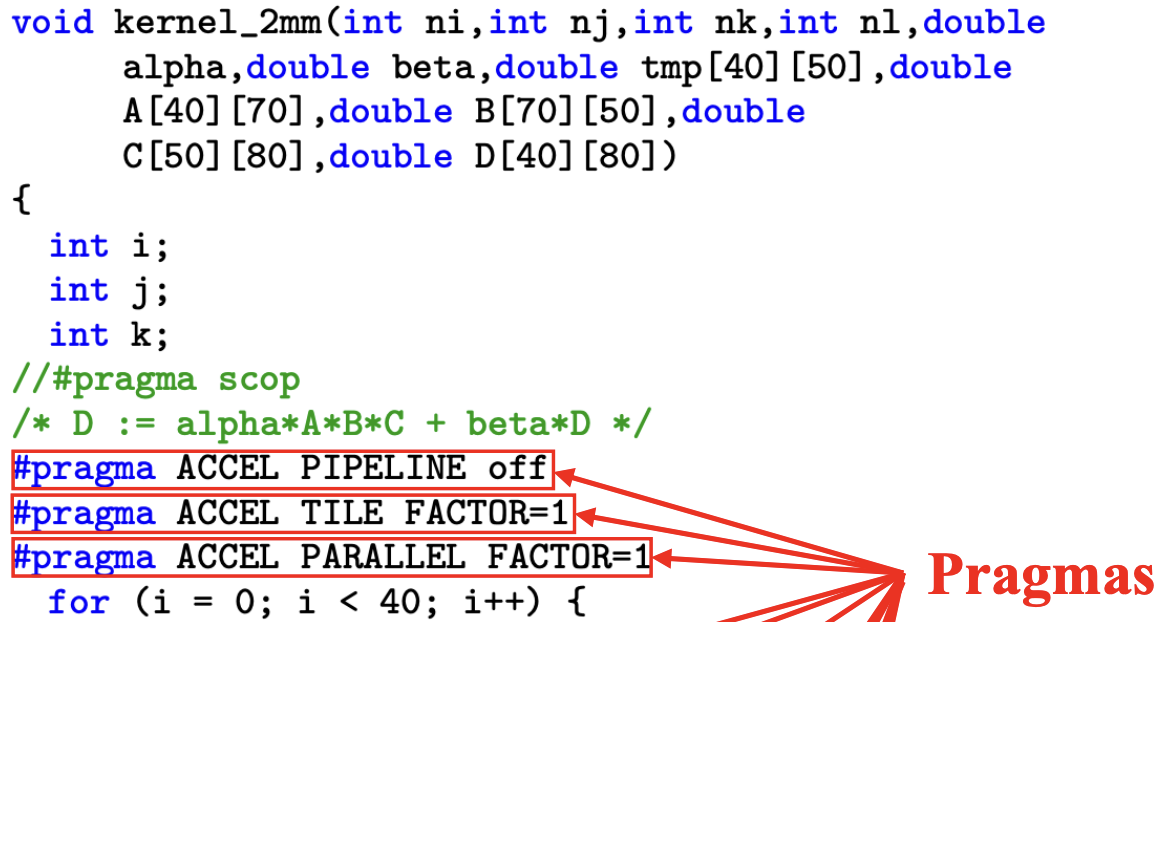

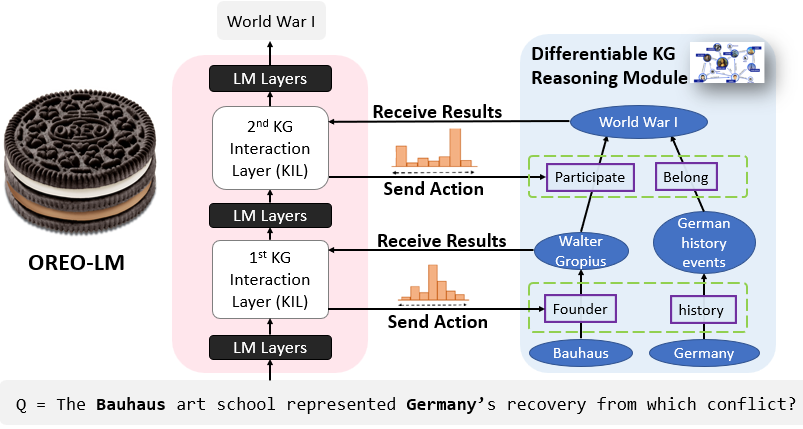

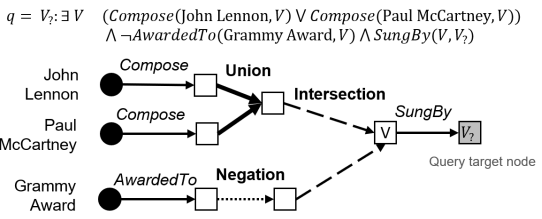

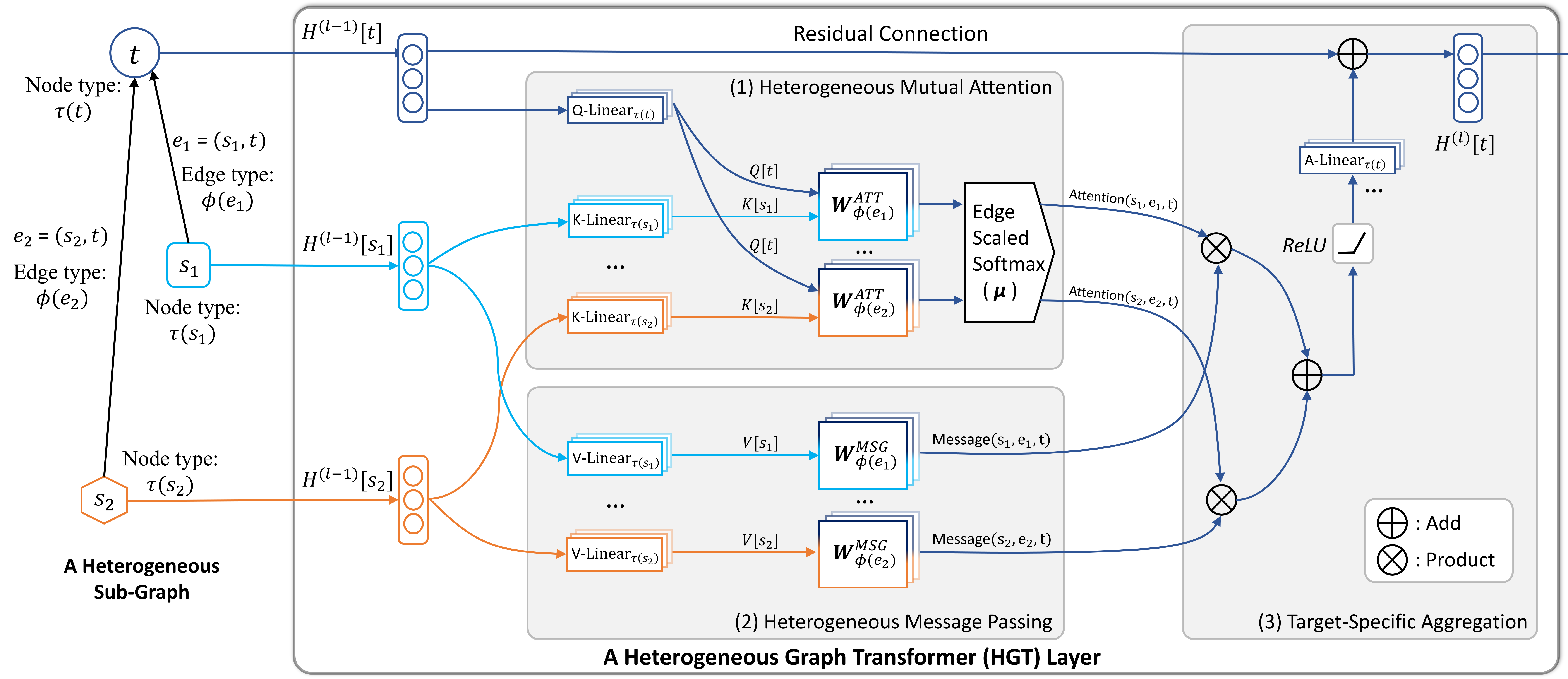

- Differentiable Neural-Symbolic Reasoning Modules, including Knowledge Graph Reasoning (OREO-LM, HGT), Complex Logical Reasoning (FuzzQA) and LLM + Tool Use (AVIS).

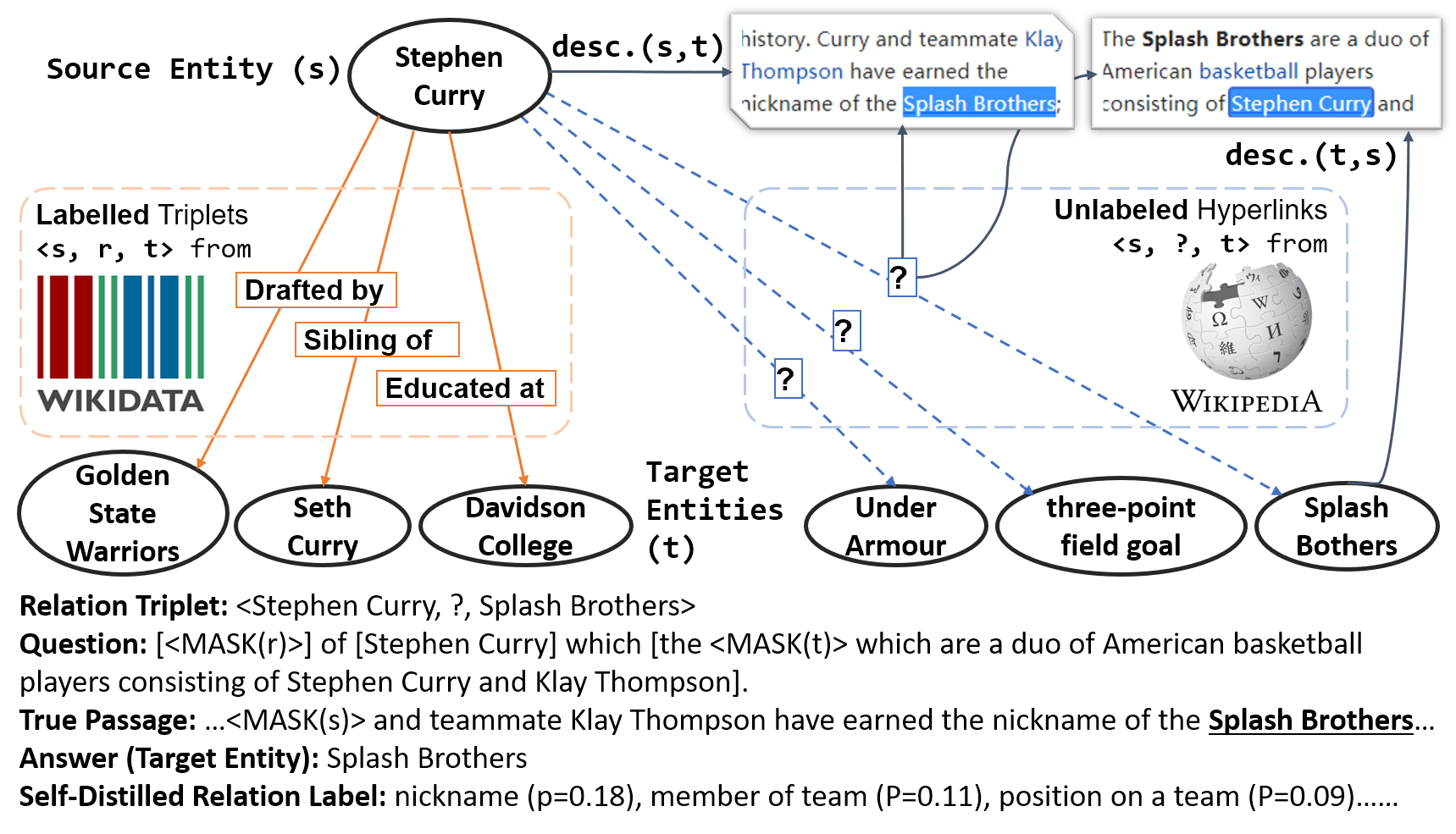

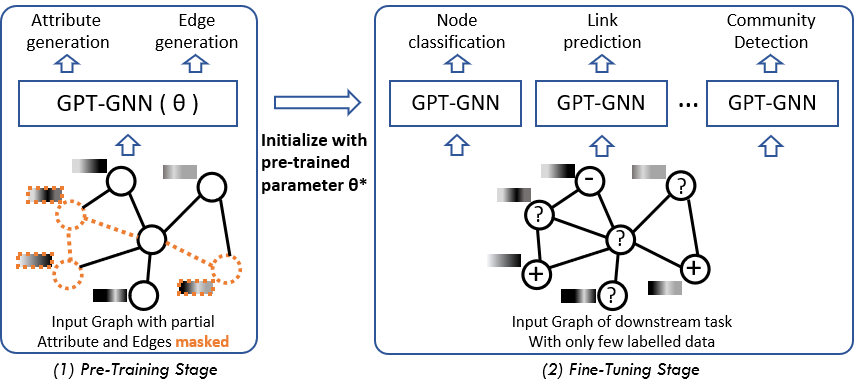

- Self-Supervised Learning from Structural Knowledge (GPT-GNN, RGPT-QA, MICRO-Graph and REVEAL).

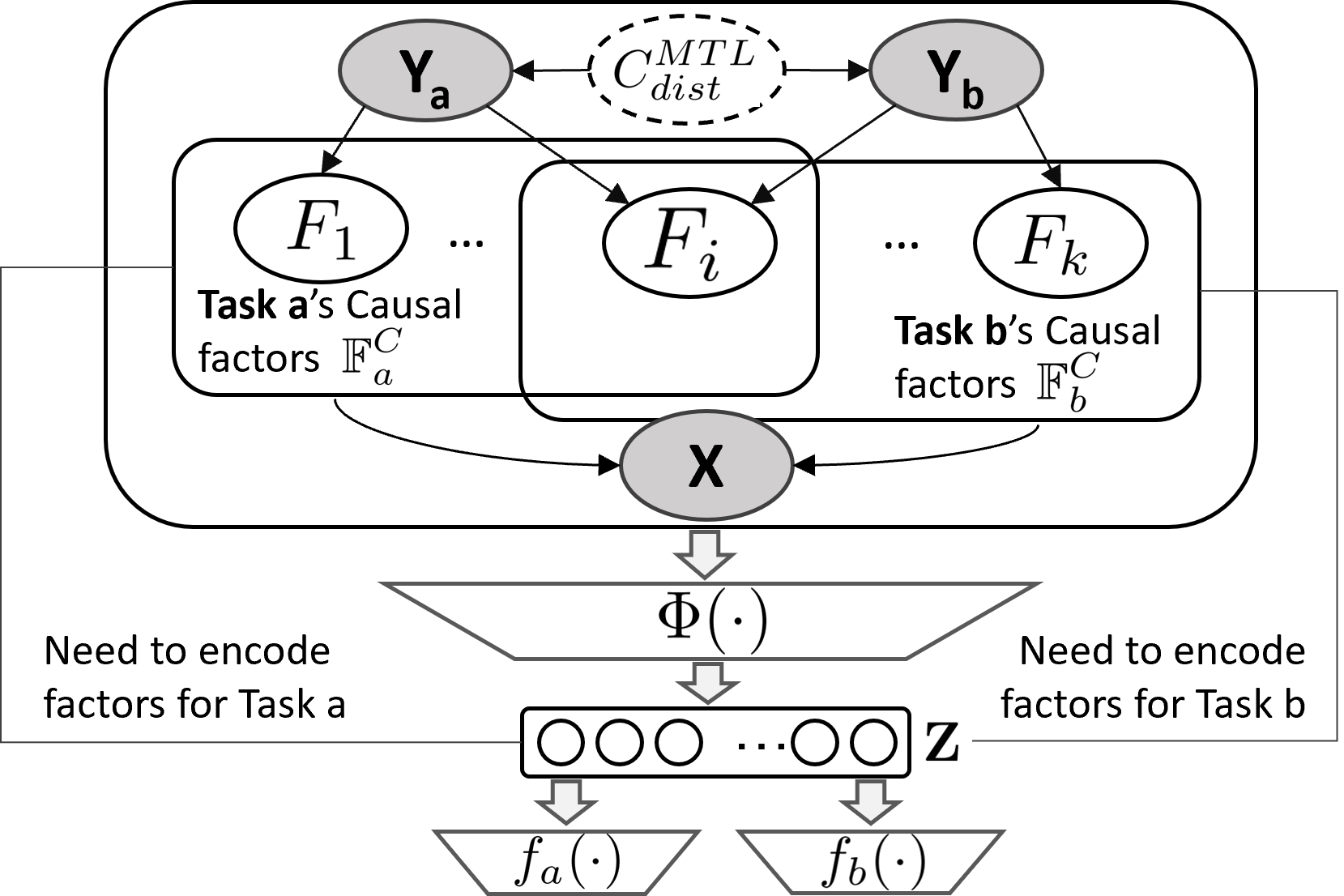

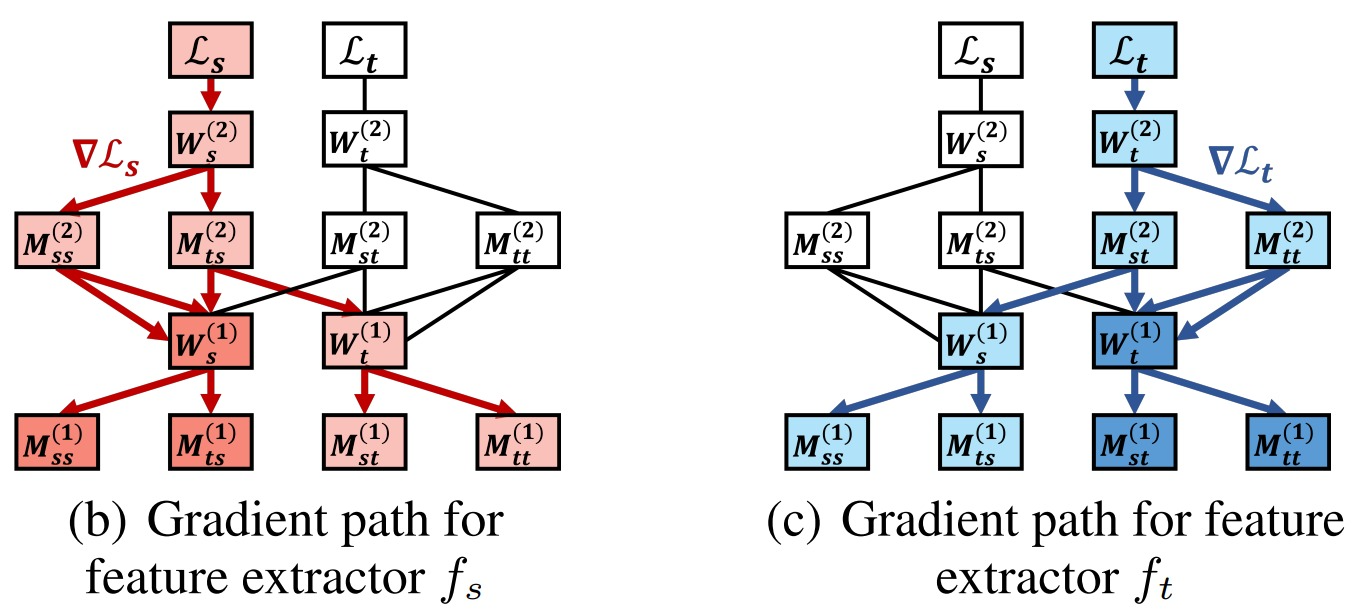

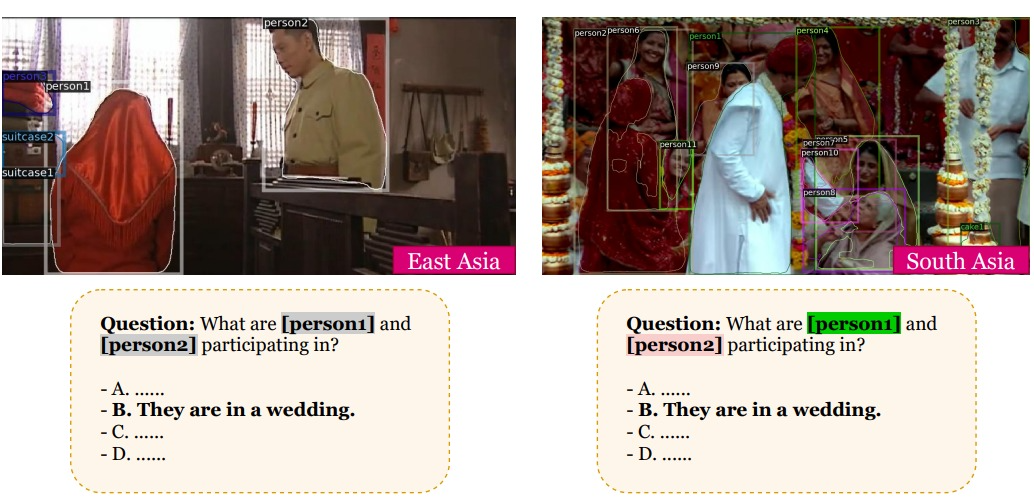

- Improving Generalization for Out-of-Distribution, Out-of-Vocabulary, Cross-Lingual, Cross-Type and Cross-Dataset tasks.

Education

- Ph.D. in Computer Science, University of California, Los Angeles (Sept. 2018 -- May 2023)

- B.Sc. in Computer Science, Peking University (Sept. 2014 -- Jun. 2018)

Academic Awards

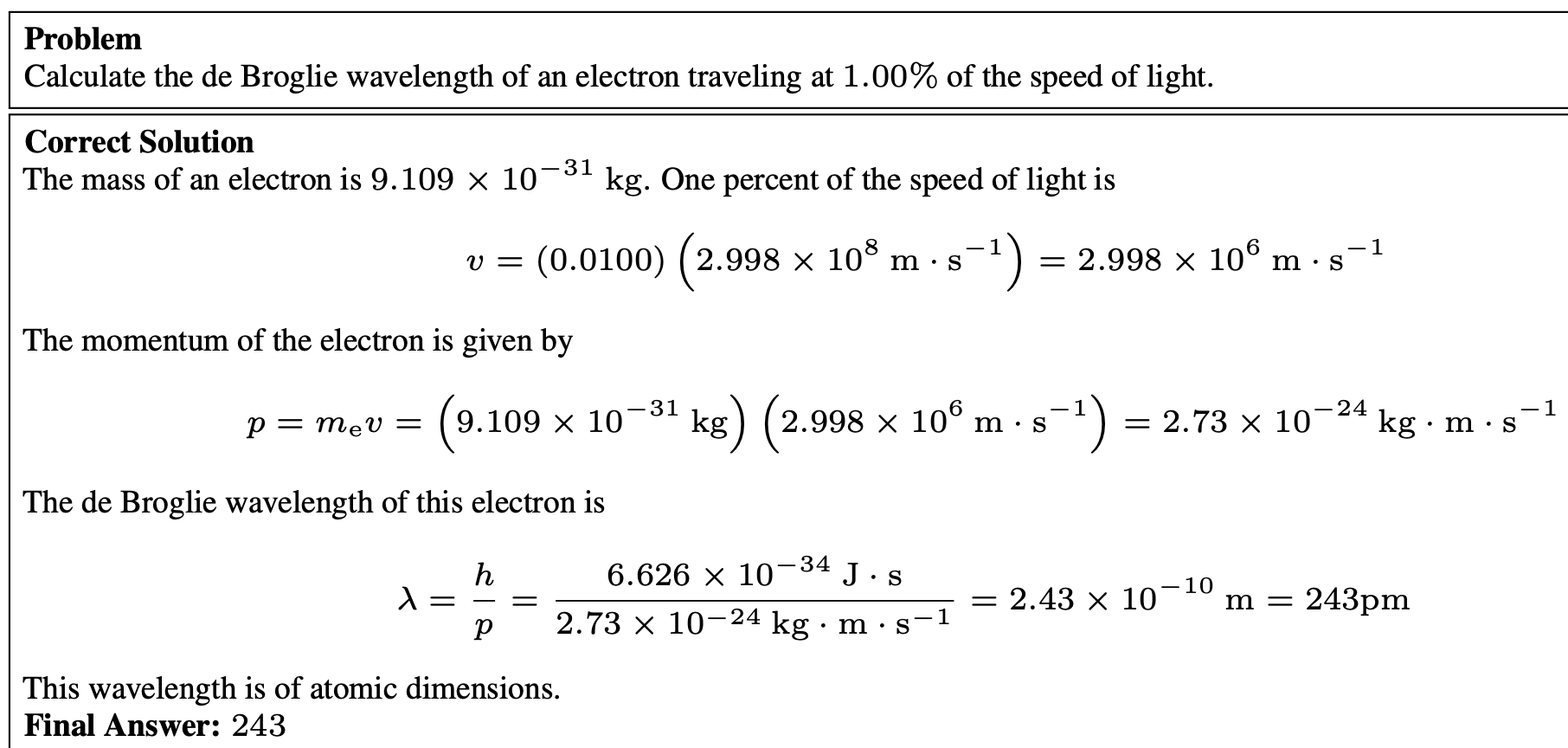

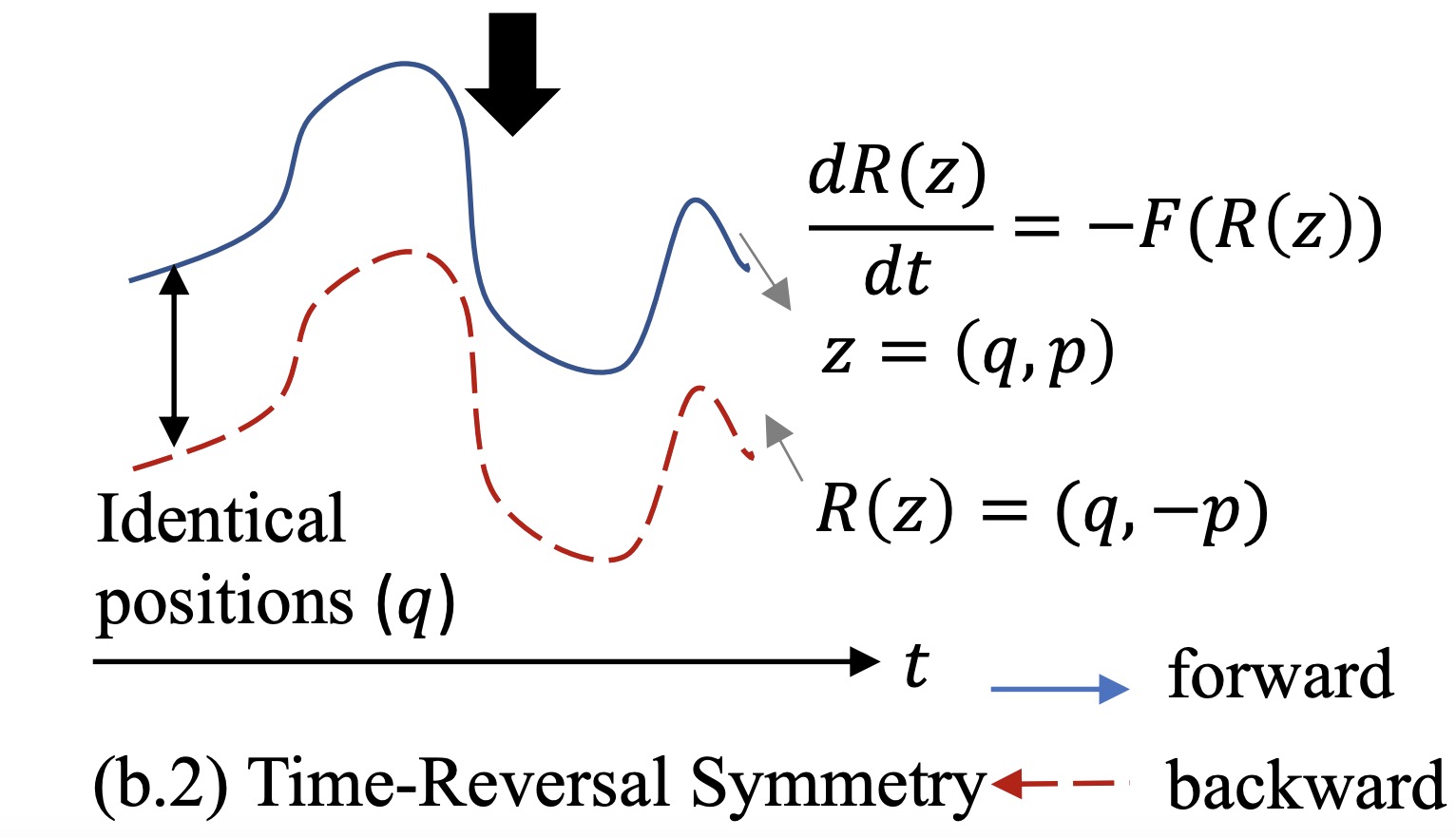

- Best Paper Award, NeurIPS 2023 Workshop (DL + Differential Equations)

- Best Paper Award, SoCal NLP Symposium 2022

- Best Student Paper Award, KDD 2020 Workshop (DL on Graphs)

- Best Full Paper Award, WWW 2019

Services

- Research Track Workflow Co-Chair: SIGKDD 2023

- NeurIPS 2022 Top Reviewer Award

- Workshop Co-Organizer of Tool-VLM @ CVPR'24 and SSL @ WWW'21